This post might ruffle a few feathers, but so be it. I want to take a tour of the different types of genealogical software, and consider what the real differences are and how they affect different types of user. What even constitutes genealogical software, and how many different ways can it be put together?

If you look at typical comparisons, such as Comparison of genealogy software and Comparison of web-based genealogy software, then you'll see that they tend to focus on very specific and rather small-scale features (e.g. single-parent families, or more than a binary choice of sex). They also tend to focus on building trees (which is only a part of genealogy) and sometimes show the hallmarks of being compiled by developers rather than by product managers or journalists.

Unfortunately, traditional categorisations such as desktop versus web-based may capture the main players but unintentionally exclude the many niche players out there: products that often provide valuable additional features or functions to certain classes of user.

Users

So, to begin this exploration, what do we mean by users? You might be thinking of experienced versus inexperienced, but no, I'm thinking about the people who compile and maintain genealogical data versus those who are simply interested and want to see something they can relate to.

Let's refer to these as genealogists and readers. This distinction is not a modern one, and would have been evident even in the days of printed genealogy.

Nowadays, genealogists will want to perform their own research and add data to some form of digital storage, usually a database. They will also want to share it with selected family, extended family, friends, or the general public, but those readers will have a different set of requirements. Typically, they will not want to install a special product or add-on, pay a subscription, or even create a special account, and they will expect access to work on whatever device they are using.

The views that these classes of user see should also be different. The genealogist will want one suited to maintenance, which makes the manipulation of their data easier, while the reader will want a read-only presentational edition. This difference is analogous to producing an article with a word-processor, and then someone looking at a read-only rendering of it without the menus and buttons used for modifying its content.

Software

Readers of this article will be aware of this term, but few would be able to give an accurate definition.

Traditionally, it has been the instructions that drive a computer, including all forms of hardware from mainframes down to embedded devices, but there are lots of variations on how this can happen. The most common is that the instructions are written in a high-level programming language that has to be converted into the binary machine code for the computer by another software component called a compiler (and yes, that compiler itself has to be compiled at some point, often by an earlier version of itself). Assembly languages (rarer these days) are similar in having to be run through an assembler, but the associated language would be considered low-level, i.e. closer to the basic operations supported by the computer. Indeed, some compilers hand off to an assembler to complete the last step of their conversion. Some systems take the high-level language and compile it on-the-fly so that it looks like it is being executed directly. Some systems interpret a script language, and this may be done directly from its source code (very slow) or from a pseudo-machine code that has been generated on-the-fly. Finally, some languages are compiled into a pseudo-machine code that is then executed in a virtual machine, which is itself another software component.
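To make the last of these variations concrete, here is a small sketch using CPython, which compiles Python source into bytecode (its pseudo-machine code) and then runs that bytecode on its own virtual machine. The `generations` function is purely illustrative, and the exact listing printed by `dis` varies between Python versions.

```python
import dis
import types

# High-level source text; the function itself is purely illustrative.
SOURCE = """
def generations(years, span=25):
    # Rough number of generations spanning a number of years
    return years // span
"""

# compile() translates the source into a code object holding bytecode,
# i.e. the pseudo-machine code for CPython's virtual machine.
code = compile(SOURCE, "<example>", "exec")
assert isinstance(code, types.CodeType)

# Executing the code object on the virtual machine defines the function.
namespace = {}
exec(code, namespace)
generations = namespace["generations"]

print(generations(200))  # prints 8

# List the bytecode instructions that the virtual machine will execute.
dis.dis(generations)
```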

In this traditional view, software is a set of procedural instructions, but there are many types of data these days that are declarative rather than procedural, including mark-up languages (e.g. HTML, XML, or SVG), cascading style sheets (CSS), and more. They are informally described as software since they may be essential to the executable components. More recently, the property of being Turing complete has been used as the criterion for whether a set of data-manipulation rules counts as a programming language.

Configurations

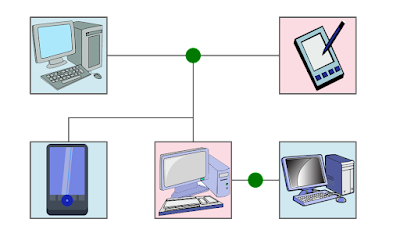

Most software configurations fall into two distinct camps, desktop and web-based, but there are some other variations to consider.

In a web-based product, there is a component that runs in your browser (which could be a page involving script code, or an add-on) and another component running on a remote web server. A wiki is a form of collaborative web-based product that manipulates multimedia data.

The desktop category invariably includes laptops, too, as the difference is largely irrelevant, but it does not include hand-held devices such as smartphones and PDAs. Here, we will use the term local to refer to software running wholly on any local device that you are in control of.

At the time of writing, the Wikipedia page Comparison of genealogy software calls this local configuration 'client', but that is not accurate, as the term has been misappropriated from client-server: another configuration that is common in fields such as business intelligence, although I'm not aware of any such product in the genealogy field. It involves a local component and a remote component, communicating via an Internet or other communications link, and sharing data or processing services. The services provided by the server component are application-level ones, and so do not typically include cloud storage or remote databases, as they're too general-purpose.

Local products have another aspect to consider, which is whether they can be run offline, with no external connection from your device. As they generally use local resources (files, databases, etc.), in contrast to the other two configurations, it would be rare, if not a commercial disaster, to mandate such a connection.

Some functionality might be presented as a service, almost invariably an online one, but we'll examine this in the section on presentation. We're using this term in the generic sense here, rather than for 'web services', which we'll mention later.

Platforms

This category covers the hardware and software environments supported by the product, i.e. the environments within which a product will run. In the two-component configurations (web-based and client-server), we will ignore the remote component as it is hidden from the user.

In principle, the operating system of a machine is separate from the hardware, but the two are often tied together in practice. For instance, iOS runs on iPhones, and Windows runs on Intel-compatible (x86) hardware; Microsoft did port Windows to other hardware (such as Alpha AXP) but it was commercially unsuccessful. As a result, we tend to think in terms of brands for Apple's macOS and Microsoft's Windows. In either case, though, the operating-system version is significant, as developers who have expended effort to ensure their product still works under the many changes within Windows 10 can attest.

Operating systems such as Android (used in hand-held devices and small streaming units) and Linux are supported on a wider range of hardware.

If the product runs within a web browser, then we have a similar set of issues with the software variations (e.g. Chrome, Firefox, Edge, or even the old Internet Explorer) and the associated web-browser versions.

Web-based products rarely require an installation (unless an add-on is involved), but even local products may get away with no installation being required. This can depend on the complexity of the product (e.g. being able to rely totally on system calls rather than on other software libraries or classes) and whether it includes its own support (products written in Java often bundle all their own classes).

An add-on (or 'add-in', or 'plug-in') is an adjunct component that extends the features or functions of a given product. We have already mentioned them in relation to web browsers, but genealogy software may also support them.

Licensing

Software is subject to copyright in many jurisdictions, and a licence establishes certain rights and restrictions for the usage of a software product. An end-user license agreement (EULA) is a contract that has to be accepted in order for the licence to be effective.

That licence may dictate how many users can run your copy of the product, whether it can be copied or redistributed, whether it can be resold, etc. Even when a product is free, a 'free software licence' may grant additional rights to reverse-engineer or modify it.

Where there is no physical copy of anything being installed (e.g. web-based products), there might be a set of terms and conditions (or 'terms of use'), a similarly binding contract that covers more than a EULA does (e.g. services).

Where usage of a product or service is not free, a software licence usually involves a one-off cost (although that may not cover version upgrades; I'm thinking of precedents like Microsoft Windows or Office here), whereas a web-based product almost always requires a subscription or pay-per-access.

Royalties are slightly different and relate to the for-profit usage of some asset, such that the owner of that asset is paid a percentage of the gains from that usage, or possibly an amount per unit sold. Software developers can occasionally be paid in royalties on future sales rather than for their development time. It would be rare to see this type of payment in the field of genealogy software, but it could apply where histories or trees are published in some way using specialised tools (see below).

Locales

There are two main aspects to a product supporting other locales: whether you can enter locale-specific data (e.g. I am English but have a German ancestor), and the user-interface locale (e.g. the product was created in the US but I am French). Locale support is often equated with support for different languages, but it additionally includes things like dates, calendars, numbers and, more rarely, the different structures of place names and personal names.

For the user interface (UI), simply claiming that text data is held in Unicode is insufficient to also claim that the product UI can be made applicable to other locales (i.e. be localised). Even if text messages have been translated, there is a lot more to localisation than just the messages (see Software Concepts and Standards). For two-component configurations, product text should not (if well-designed) come directly from the server side. For instance, errors would be signalled by "throwing an exception", or indicated in a communications response, and a corresponding client-side message reported to the end-user.
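A minimal sketch of that separation, assuming a hypothetical server that reports only a locale-neutral error code with parameters, and a client that holds its own message catalogues. The endpoint, the `PERSON_NOT_FOUND` code, and the message texts are invented for illustration; a real product would more likely use an established catalogue mechanism such as gettext.

```python
import json

# Hypothetical client-side message catalogues, one per UI locale.
MESSAGES = {
    "en": {"PERSON_NOT_FOUND": "No person with ID {id} was found."},
    "fr": {"PERSON_NOT_FOUND": "Aucune personne avec l'identifiant {id} n'a été trouvée."},
}


def server_lookup(person_id):
    """Hypothetical server endpoint returning a locale-neutral JSON response.

    The server says *what* went wrong (a code plus parameters), never a
    human-readable sentence in any particular language.
    """
    return json.dumps({"error": "PERSON_NOT_FOUND", "params": {"id": person_id}})


def client_message(response_text, ui_locale):
    """Build the end-user message in the client's own UI locale."""
    response = json.loads(response_text)
    catalogue = MESSAGES.get(ui_locale, MESSAGES["en"])  # fall back to English
    return catalogue[response["error"]].format(**response["params"])


response = server_lookup("I42")
print(client_message(response, "en"))  # No person with ID I42 was found.
print(client_message(response, "fr"))
```

The same server response serves every client locale; only the client decides which language the end-user sees.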

If a date (or time) is formatted as a readable text value (computers use ISO 8601 or some binary date formats internally), then it should be appropriate to the end-user's locale, and ideally to their preference too; locale libraries (NLS) usually have options for customisable full, long, medium, and short date formats.
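The four conventional styles can be illustrated with a deliberately hand-rolled sketch for two English-speaking locales. The patterns below are assumptions chosen for illustration; a real product should take them from a locale library (for example, one built on Unicode CLDR data) rather than hard-coding them. Note how easily the two short forms of the same date could be confused.

```python
from datetime import date

MONTHS = ["January", "February", "March", "April", "May", "June", "July",
          "August", "September", "October", "November", "December"]
WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday",
            "Saturday", "Sunday"]


def format_date(d, style, locale):
    """Format a date in one of four styles; patterns are illustrative only."""
    month, weekday = MONTHS[d.month - 1], WEEKDAYS[d.weekday()]
    patterns = {
        "en-GB": {
            "full": f"{weekday} {d.day} {month} {d.year}",
            "long": f"{d.day} {month} {d.year}",
            "medium": f"{d.day} {month[:3]} {d.year}",
            "short": f"{d.day:02d}/{d.month:02d}/{d.year}",
        },
        "en-US": {
            "full": f"{weekday}, {month} {d.day}, {d.year}",
            "long": f"{month} {d.day}, {d.year}",
            "medium": f"{month[:3]} {d.day}, {d.year}",
            "short": f"{d.month}/{d.day}/{d.year % 100:02d}",
        },
    }
    return patterns[locale][style]


d = date(1861, 4, 7)
for locale in ("en-GB", "en-US"):
    for style in ("full", "long", "medium", "short"):
        print(locale, style, format_date(d, style, locale))
```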

When entering dates relevant to some other locale, the original textual representation may be unfamiliar (although it should preferably be kept intact as evidence), but it would be converted to a standardised internal format ... unless it is not a Gregorian date. For foreign (non-Gregorian) or ancient calendars, there is no international standard for the digital storage of their dates, although GEDCOM has a go at supporting them.
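A sketch of that approach, loosely modelled on the GEDCOM 5.5.1 calendar escapes (such as `@#DJULIAN@` and `@#DHEBREW@`): the original text is always kept as evidence, and only a plain Gregorian "day month year" form is normalised to ISO 8601. The `RecordedDate` class and `parse_date` function are hypothetical, and a real parser would also need to handle ranges, approximations, dual dating, and partial dates.

```python
import re
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Month abbreviations used for Gregorian (and Julian) dates in GEDCOM.
MONTHS = {m: i for i, m in enumerate(
    ["JAN", "FEB", "MAR", "APR", "MAY", "JUN",
     "JUL", "AUG", "SEP", "OCT", "NOV", "DEC"], start=1)}

ESCAPE = re.compile(r"^@#D([A-Z ]+)@\s*(.*)$")        # e.g. "@#DJULIAN@ 1 JAN 1700"
DAY_MONTH_YEAR = re.compile(r"^(\d{1,2}) ([A-Z]{3}) (\d{1,4})$")


@dataclass
class RecordedDate:
    original: str          # text exactly as recorded, kept as evidence
    calendar: str          # e.g. "GREGORIAN", "JULIAN", "HEBREW", "FRENCH R"
    iso: Optional[str]     # ISO 8601 form, only for simple Gregorian dates


def parse_date(text):
    calendar, rest = "GREGORIAN", text.strip()
    escape = ESCAPE.match(rest)
    if escape:
        calendar, rest = escape.group(1), escape.group(2)
    iso = None
    if calendar == "GREGORIAN":
        match = DAY_MONTH_YEAR.match(rest)
        if match and match.group(2) in MONTHS:
            try:
                iso = date(int(match.group(3)), MONTHS[match.group(2)],
                           int(match.group(1))).isoformat()
            except ValueError:     # e.g. "31 FEB 1750" stays unnormalised
                iso = None
    return RecordedDate(original=text, calendar=calendar, iso=iso)


for text in ("12 MAR 1750", "@#DJULIAN@ 1 JAN 1700", "@#DHEBREW@ 15 NSN 5620"):
    print(parse_date(text))
```

Non-Gregorian dates are deliberately left without an ISO form rather than being silently converted.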

One of the traps that a product can fall into when storing data values in a textual form (e.g. in a GEDCOM file, or even in a settings file) is storing them in the current locale (of which there can be two different ones in a two-component configuration) rather than in a locale-independent format. The stored data should always be independent of the end-user's locale (see programming locale), and this is especially relevant where there are multiple genealogists updating the same data.
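The trap is easy to reproduce. In this hypothetical sketch, a place's latitude is written to a file using a German-style display format, and a later reader of that file (possibly another genealogist's software) cannot parse it; storing locale-independent forms, and localising only for display, avoids the problem. The `display_number` helper is an assumption for illustration, not a real locale library.

```python
from datetime import date


def display_number(value, locale):
    """Hypothetical display formatter for two example locales."""
    text = f"{value:.4f}"                  # e.g. "52.2053"
    return text.replace(".", ",") if locale == "de" else text


latitude = 52.2053

# The trap: the value is written using the current *display* locale.
stored_badly = display_number(latitude, "de")   # "52,2053"
try:
    float(stored_badly)
    readable = True
except ValueError:
    readable = False                            # locale-neutral parsing fails
print("readable:", readable)

# The fix: store locale-independent forms and localise only for display.
stored_well = repr(latitude)                    # "52.2053"
stored_date = date(1861, 4, 7).isoformat()      # "1861-04-07"
assert float(stored_well) == latitude
assert date.fromisoformat(stored_date) == date(1861, 4, 7)
print(display_number(float(stored_well), "de"))  # shown to a German user
```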

Evolution

If you're using an old product, then you may be accepting that there will be no further revisions or fixes, but that does not mean that licensing is then irrelevant. I have tried to get a software licence for a deprecated and unsupported library, and was quoted such a ridiculous amount of money that I simply wrote my own version.

There are two aspects to the evolution of a product: active, meaning that new features may still be added in subsequent versions, and supported, meaning that problems will be addressed and ideally fixed. These are distinct, and a product may have reached the end of its active life but still be supported for a given period of time.

Data Manipulation

Let's consider the types of data that a genealogist can add, change, or leverage through their software product.

The data might be private to a single genealogist or to a small selected group of genealogists (as with a family project or a family history society), and local products fall into this category. Web-based products could involve either private or shared data. Back in What to Share, and How, the terms "unified trees" and "user-owned trees" were cited as being used in some blogs. They correspond, respectively, to the shared and private terms used here, but we will not specifically consider "trees" at this point as they are merely a visualisation of some data.

Lineage is likely to be the thing most people will associate with genealogy (possibly because of the shared etymology with genetics), although products often get sucked into non-lineage complications. The mention of "single-parent families" at the start of this article is one example: we all have two progenitive parents, even if they are unknown. Including adopted or fostered individuals is another example. In both of these cases, it's the concept of a family that causes the complication, since lineage, families, and marriage are all separate concepts that do not necessarily coincide (see Happy Families).
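One way to keep these concepts apart in a data model is sketched below: biological lineage is held as exactly two (possibly unknown) parent links on each person, while households and marriages are separate records that may or may not coincide with it. The class names, people, and roles are hypothetical, chosen only to illustrate the separation.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Dict, Iterator, Optional


@dataclass
class Person:
    pid: str
    name: str
    # Lineage: always two progenitive parents, either of whom may be unknown.
    father: Optional[Person] = None
    mother: Optional[Person] = None


@dataclass
class Household:
    """A social family unit, independent of lineage and of marriage."""
    label: str
    roles: Dict[str, str] = field(default_factory=dict)   # person ID -> role


@dataclass
class Marriage:
    partner_a: Person
    partner_b: Person
    year: Optional[int] = None


def ancestors(person: Person) -> Iterator[Person]:
    """Yield known biological ancestors, following lineage links only."""
    for parent in (person.father, person.mother):
        if parent is not None:
            yield parent
            yield from ancestors(parent)


mary = Person("I1", "Mary Smith")
tom = Person("I2", "Tom Smith", father=None, mother=mary)   # father unknown
john = Person("I3", "John Brown")
jane = Person("I4", "Jane Brown")

wedding = Marriage(john, jane, 1875)
home = Household("Brown household",
                 {"I3": "head", "I4": "wife", "I2": "adopted son"})

print([p.name for p in ancestors(tom)])   # lineage: ['Mary Smith']
print(home.roles[tom.pid])                # household role: adopted son
```

Tom belongs to the Brown household without the Browns appearing anywhere in his lineage, and the unknown father is simply an empty slot rather than a "single-parent family".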

With many products, and especially ones updating shared data, it is assumed that the people and relationships being represented are real (i.e. that they exist, or once did). But they might be tentative or hypothetical if part of a research project, and they might even be fictitious if you're a writer. Depending on the nature of your research (for instance, a one-name or one-place study as opposed to a specific family), the people and relationships might have to be disjoint, or include incidental people who were unrelated but still part of the history.

The way a product treats places can be crucial, depending on the nature of your research. In many products, a place is simply a string of jurisdictional names (a stance shared by the current GEDCOM specification), for example "Americus, Indiana, US". However, places exist (or existed), have an identity that may need confirming, are part of a hierarchy (as with person lineage), may have multiple names, have a history, have documents associated with them, etc. The point is that they can be treated as historical subjects in a very similar way to people. More enlightened products can accommodate this depth of properties, and go beyond some specific place name.
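As a hypothetical sketch of the richer approach, each place below is a record in its own right, with time-bounded names and a parent in a jurisdictional hierarchy; the familiar comma-separated string is then derived rather than stored. The year boundaries used for Indiana are deliberately coarse and are there only to show a name changing over time.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Place:
    pid: str
    # Names over time as (name, first_year, last_year); None means open-ended.
    names: List[Tuple[str, Optional[int], Optional[int]]]
    parent: Optional[Place] = None
    documents: List[str] = field(default_factory=list)

    def name_in(self, year: int) -> str:
        """Return the first name whose period covers the given year."""
        for name, first, last in self.names:
            if (first is None or first <= year) and (last is None or year <= last):
                return name
        return self.names[0][0]          # fall back to the earliest recorded name

    def jurisdiction(self, year: int) -> str:
        """Derive the conventional comma-separated place string for a year."""
        parts, place = [], self
        while place is not None:
            parts.append(place.name_in(year))
            place = place.parent
        return ", ".join(parts)


us = Place("P1", [("US", None, None)])
indiana = Place("P2", [("Indiana Territory", 1800, 1816), ("Indiana", 1817, None)],
                parent=us)
americus = Place("P3", [("Americus", None, None)], parent=indiana)

print(americus.jurisdiction(1900))   # Americus, Indiana, US
print(indiana.name_in(1810))         # Indiana Territory
```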

Images, whether photographs or document scans, are probably supported by all products that maintain genealogical data. But they may appear as thumbnail images in a tree visualisation, as an image gallery for a person/family (or for a place), or as a portrait accompanying biographical details.

It is not unusual these days to have a product help you with maintaining evidence and citing sources, but this wasn't always the case. It's definitely a move in the right direction, although the reality often falls short of an academic standard. On its own, a source reference, and particularly a simple hyperlink, does no more than identify a source of information. That information needs to support (or refute) a claim for it to be considered evidence of something, and so an indication of how the information is relevant to the claim is essential.
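A minimal sketch of that distinction, with hypothetical class names: a `Source` only identifies where information came from, whereas an `EvidenceLink` ties it to a specific claim, records whether it supports or refutes that claim, and refuses to exist without an explanation of its relevance. The example source, URL, and reasoning are placeholders.

```python
from dataclasses import dataclass
from enum import Enum


class Stance(Enum):
    SUPPORTS = "supports"
    REFUTES = "refutes"


@dataclass(frozen=True)
class Source:
    title: str
    locator: str                    # archive reference, URL, etc.


@dataclass
class EvidenceLink:
    claim: str
    source: Source
    stance: Stance
    reasoning: str                  # how the information bears on the claim

    def __post_init__(self):
        # A bare reference is not evidence: require the relevance to be stated.
        if not self.reasoning.strip():
            raise ValueError("evidence must explain its relevance to the claim")


census = Source("Example census return (placeholder)", "https://example.org/census/123")
link = EvidenceLink(
    claim="Tom Smith was born about 1861",
    source=census,
    stance=Stance.SUPPORTS,
    reasoning="The household entry gives Tom's age as 20 in a census taken in 1881.",
)
print(f"{link.source.title} {link.stance.value}: {link.claim}")
```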

A product will usually involve a database to store all the data that it holds, but this is not true of all products. In fact, we'll explain in a moment that a database can be a limiting factor. Whether genealogical software actually needs a database at all was questioned back in Do Genealogists Really Need a Database?

Databases are good at storing discrete items of information and indexing them; what they're not good at is handling bulk text, but why is text important? Well, in addition to documenting real history, it's also needed for making proof arguments and general inferences, and for transcriptions, both of these latter forms requiring rich text (with formatting and mark-up) rather than plain text. An important comment on this perspective may be found at significant issue, and a brief discussion of different types of text may be found at Types of Text. This is not the same as the simple notes that we're led to believe are sufficient for our purposes. One reason for ignoring the requirement of real text (often implying that you just use a word-processor for that) is that bulk text is not only hard to store effectively but its semantics are also beyond the control of the software, and woe betide our software letting real genealogists be in control of their data. Linked to this limitation is the BS view that software can generate narrative from the discrete items of information in its database.

When a product uses data that is not of its own creation, or generates data for explicit consumption by other software, we refer to it as import/export. It typically occurs with data files such as GEDCOM ones, but it can also involve an API (application programming interface). An API presents a programmatic view of the external data, and this may involve a communications link. That communications link can involve web services, which are more of a machine interface than an application interface.
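As an illustration of file-based import, every GEDCOM line has the form `level [xref] tag [value]`, and the level numbers imply a tree. The sketch below parses that structure; it is a simplified, hypothetical reader that ignores character encodings, `CONT`/`CONC` continuation lines, and validation (such as level numbers that skip a step), all of which a real importer must handle.

```python
import re

# level, optional cross-reference ID, tag, optional value
LINE = re.compile(r"^\s*(\d+) (?:(@[^@]+@) )?(\w+)(?: (.*))?$")


def parse_gedcom(text):
    """Parse GEDCOM-style lines into a list of nested record dictionaries."""
    root = {"tag": None, "children": []}
    stack = [root]                     # stack[n + 1] holds the latest level-n node
    for raw in text.splitlines():
        if not raw.strip():
            continue
        match = LINE.match(raw)
        if match is None:
            raise ValueError(f"not a GEDCOM line: {raw!r}")
        level, xref, tag, value = match.groups()
        node = {"xref": xref, "tag": tag, "value": value or "", "children": []}
        del stack[int(level) + 1:]     # pop back to this line's parent
        stack[-1]["children"].append(node)
        stack.append(node)
    return root["children"]


SAMPLE = """\
0 @I1@ INDI
1 NAME Mary /Smith/
1 BIRT
2 DATE 12 MAR 1750
0 TRLR
"""

for record in parse_gedcom(SAMPLE):
    print(record["xref"], record["tag"], len(record["children"]))
```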

Presentation

There are many different approaches to publishing genealogical information for consumption by readers, and this can involve the product used by the genealogist (via some explicit publishing operation, or by simply sharing the genealogist's view) or some separate, more specialised product.

There are some distinct forms that can be generated for presentation: reports, web pages, or physical objects. The actual content of these forms will be discussed below, but as an example, a report here could include biographical text, images, trees, or all of these.

A genealogist using a local product may be able to view an on-screen report, but one option for sharing it is to generate a printout. The content would obviously be static (with no interaction possible) but it can be shared on a very selective basis. Such static reports could also be emailed to selected readers.

A more dynamic form would be web pages, i.e. *.html or *.htm files; *.svg files (employing Scalable Vector Graphics) can also be used, but there are security implications when SVG is used on its own (see What is SVG? Publishing Trees for Free). Web pages would be viewed in a web browser and so can interact with the reader to give a richer feel, or to present access to more parts of the information, but it is harder to control their privacy. Unless they are behind some gateway requiring authentication, they would usually be accessible to everyone. Requiring an add-on to be installed is another way to implement selective privacy, but this would deter some readers. Another option is to email copies of the web pages, since the recipients can then view them privately on their own computer with no loss of functionality. Web-based products might attempt to take shortcuts and simply present the same view to readers that the genealogist would have, or at least some slightly limited version of it. If this requires a subscription, or even account creation, then it could deter readers. A recent summary of how various familiar products generate online presentations may be found at 8 Places to Put Your Family Tree Online.
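To show how little a read-only presentation needs, here is a hypothetical sketch that renders one person's details as a static HTML page with no editing controls. The `person` dictionary layout and its contents are assumptions, and all text is escaped with `html.escape` so that names or notes containing characters such as `<` or `&` cannot break the page.

```python
import html


def render_person_page(person):
    """Render a static, read-only HTML page for readers (no editing controls)."""
    name = html.escape(person["name"])
    events = "\n".join(
        f"    <li>{html.escape(kind)}: {html.escape(when)}, {html.escape(where)}</li>"
        for kind, when, where in person["events"]
    )
    biography = html.escape(person["biography"])
    return f"""<!DOCTYPE html>
<html lang="en">
<head><meta charset="utf-8"><title>{name}</title></head>
<body>
  <h1>{name}</h1>
  <ul>
{events}
  </ul>
  <p>{biography}</p>
</body>
</html>
"""


person = {
    "name": "Tom Smith",
    "events": [("Born", "7 April 1861", "Example Town"),
               ("Married", "1885", "St Mary & St John, Example Town")],
    "biography": "Tom was raised in the Brown household after his adoption.",
}
page = render_person_page(person)
print(page)
```

The resulting string could be saved as an `.html` file and emailed to selected readers, or uploaded behind whatever access control the genealogist prefers.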

The production of physical objects requires specialised support, which would be seen as a service rather than a software component, and would hence carry service-related payments and conditions. Examples include plaques involving metal, wood, ceramics, or resin; scrolls and framed prints; and grave markers exhibiting QR codes to locate online information. Most services in this category would be online, or at least have an online option, but the days when we had to send in our images for printing on a memory stick, or even a roll of film, are not that far behind us.

Books, magazine/journal articles, and blog articles are an obvious form of publishing, but they are disjoint from our genealogical data. Although there are tools for creating tree visualisations to include in such publications, there is no easy route to generating the associated text, especially if your product uses a database. Even a wiki-based product is unlikely to offer enough formatting control to produce anything more than a simple printed article.

But what about the published content itself? The visualisation of relationships that we term family trees will be ubiquitous, but there are structural variations (see A Tree By Any Other Name Would Smell As Sweet) as well as different formats (e.g. fan charts). Bulk text in the form of historical/biographical narrative, or images, etc., as identified in the previous section, may also be involved.

While the published content is unlikely to be in a proprietary format requiring a specialised viewer (I'm certainly not aware of any examples), the act of publishing may require extra local software in the form of a standalone product accepting imported data, an add-on to the genealogist's product, or some more complex arrangement (e.g. an add-on invoking a standalone product).

Possibly untapped modes of visualisation are graphics that show geographical movements of people or families over time, correlate the locations of people to help establish identities, or link evidence and useful information to source material (transcribed or scanned).

Conclusion

It should be clear by now that simple feature-by-feature comparisons of local and/or web-based products do not capture the full scope of existing products, nor do they acknowledge the wide range of possible functions and configurations. Focus tends to remain fixed on the software used by the genealogist, as though that were the be-all and end-all of genealogical research, with much less effort and consideration given to readers. As James Tanner remarked on his own blog, very recently: You can't take it with you!